Introduction: The Hype vs. Reality of Generative AI

Generative AI has quickly gone from a buzzword to a business obsession. Tools like ChatGPT and Microsoft Copilot are everywhere, and companies are pouring billions into exploring their potential. Yet, despite the excitement, a shocking statistic from an MIT report keeps making headlines: 95% of enterprise GenAI pilot projects fail to deliver measurable ROI.

At first glance, this sounds like proof that AI is overhyped. But the truth is more complicated and far more interesting. Generative AI itself isn’t the problem. Instead, most failures can be traced back to how organisations adopt and manage it. Let’s unpack why so many pilots end in “AI purgatory,” and what separates the 5% that actually succeed.

The Real Reason Behind Pilot Failures

The “Pilot Purgatory” Trap

Many AI projects don’t collapse because of technical shortcomings - they stall in the pilot phase. Companies run endless experiments that never move into production, creating wasted effort, mounting costs, and declining confidence among both leaders and employees.

This “purgatory” emerges when pilots are treated as isolated experiments rather than steps toward real integration. Without a clear path to scale, even promising initiatives lose momentum and end up as expensive proofs of concept.

The Friction Points That Hold Companies Back

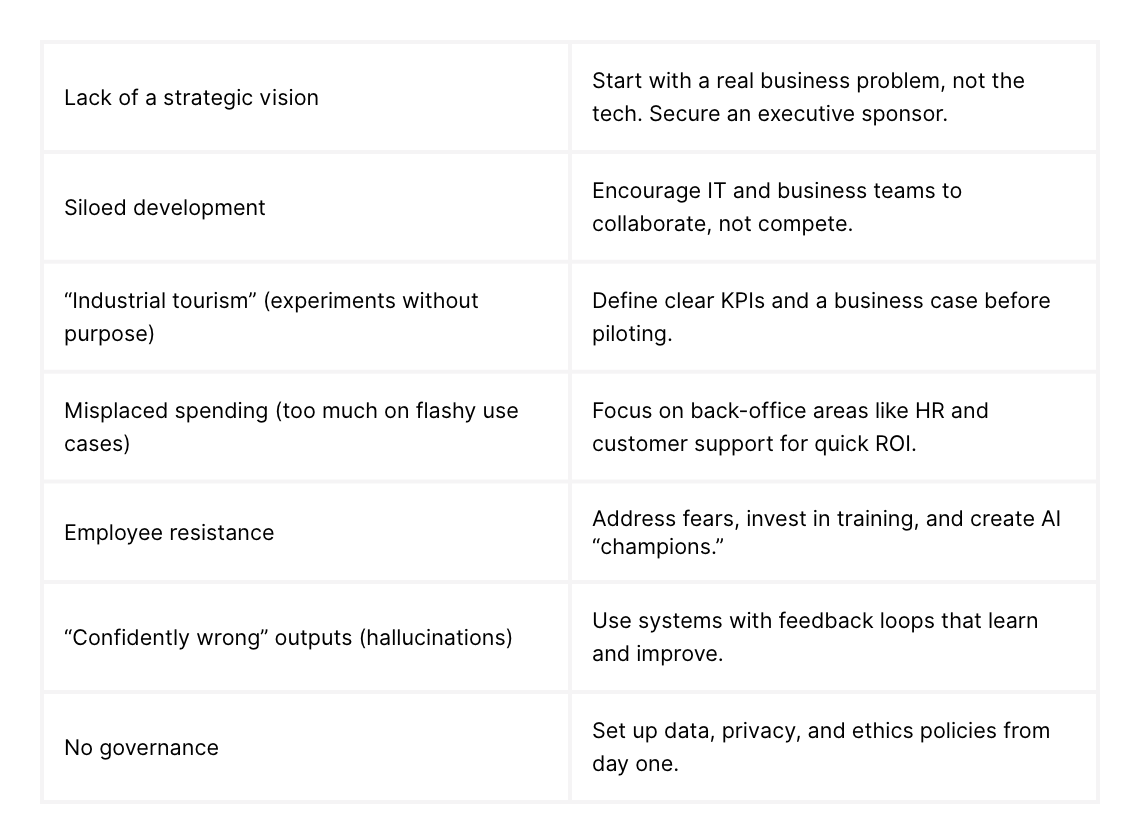

Here are the biggest reasons why GenAI pilots stall:

Breaking It Down: Why These Frictions Arise

1. Strategy Without Alignment

Too often, AI pilots are run by IT or innovation teams without input from business leaders. The result? Cool technology that doesn’t solve real problems. Pilots end up being more like “industrial tourism”: experiments that look good in a press release but lack business value. As a result, most pilots make it out of the boardroom but fail when they meet real-world data and usage.

2. The Human Factor

The biggest source of resistance isn’t technical but human. Employees fear AI might replace their jobs, don’t trust its accuracy, or simply don’t want to change their workflows. Some even bypass official tools and use consumer AI apps in secret (“shadow AI”), creating security risks.

Executives, meanwhile, expect instant results. When productivity dips temporarily (a common “J-curve” effect before improvements kick in), they lose patience and pull the plug.

3. Technology & Governance Gaps

Even when projects are well-intentioned, technical issues like hallucinations derail trust. If employees spend more time fact-checking AI outputs than doing the task themselves, any ROI disappears.
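To make the fact-checking point concrete, here is a minimal sketch of the break-even arithmetic. The function name and all the minute values are hypothetical and chosen only for illustration; they are not taken from the MIT report or from any measured project.

```python
def net_minutes_saved(manual_minutes: float, ai_minutes: float, review_minutes: float) -> float:
    """Minutes saved per task when AI drafts the work and a person checks it.

    A negative result means the AI-assisted route is slower than doing
    the task by hand.
    """
    return manual_minutes - (ai_minutes + review_minutes)


# Hypothetical task that takes 30 minutes by hand and 2 minutes to generate with AI.
light_review = net_minutes_saved(manual_minutes=30, ai_minutes=2, review_minutes=10)
heavy_review = net_minutes_saved(manual_minutes=30, ai_minutes=2, review_minutes=35)

print(light_review)  # positive: the pilot saves time on every task
print(heavy_review)  # negative: fact-checking has eaten the gain
```

Under these assumed numbers, the same tool either saves time or costs time depending only on how long the review takes, which is why trust in the output matters as much as the output itself.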

On top of that, many organisations lack clear governance frameworks. Without policies around privacy, ethics, and data usage, projects hit regulatory roadblocks, especially in industries like healthcare and finance.

The AI Bubble - Or Just History Repeating Itself?

The rapid surge of investment in AI has led some to warn of an “AI bubble,” echoing the dot-com era. Billions are being spent on pilots, many of which never leave the lab. But when seen in a broader context, this pattern isn’t unique to AI.

Ten years ago, in the digitalisation era, research from Oxford University on more than 1,300 public sector IT projects revealed that one in five suffered cost overruns of more than 25%, and that projects ran on average 24% longer than planned. At that time, organisations were still learning how to adopt large-scale IT systems, facing challenges in procurement, infrastructure, and project management.

Today, most companies already run on digital infrastructure, but the complexity lies in layering new technologies like AI on top of existing processes. The risks also follow a “fat-tail” distribution, as Bent Flyvbjerg’s research shows: while many projects miss their targets only slightly, a minority fail catastrophically with extreme overruns in time or cost.
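A small simulation can show what a “fat-tail” distribution means in practice. This is only a sketch under stated assumptions: the two portfolios, the distribution parameters, and the helper `top_share` are invented for illustration and are not drawn from Flyvbjerg’s data.

```python
import random


def top_share(overruns, fraction=0.05):
    """Share of the total overrun caused by the worst `fraction` of projects."""
    ranked = sorted(overruns, reverse=True)
    k = max(1, int(len(ranked) * fraction))
    return sum(ranked[:k]) / sum(ranked)


rng = random.Random(42)  # fixed seed so the run is repeatable
n = 10_000

# Thin-tailed portfolio (assumed): overruns cluster around 10%.
thin = [max(0.0, rng.gauss(0.10, 0.05)) for _ in range(n)]

# Fat-tailed portfolio (assumed): most overruns are small, a few are extreme.
fat = [rng.paretovariate(1.5) - 1.0 for _ in range(n)]

print(f"thin-tailed: worst 5% cause {top_share(thin):.0%} of total overrun")
print(f"fat-tailed:  worst 5% cause {top_share(fat):.0%} of total overrun")
```

In the thin-tailed case the worst projects account for a modest slice of the total overrun, while in the fat-tailed case a handful of projects dominate it. That is the pattern the paragraph above describes: planning around the average project understates the risk.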

Digital transformation efforts more broadly show similar patterns. Analyses across industries suggest that only 5-30% of programs are considered fully successful. The parallels to GenAI are clear: while enthusiasm drives rapid investment, successful scaling requires maturity, alignment, and patience.

Rather than interpreting the high failure rate as a sign of an “AI bubble,” history suggests we are witnessing a familiar cycle: ambitious investments that test the limits of organisations’ ability to integrate and adapt.

How the Successful 5% Get It Right

The organisations that make it past pilot purgatory don’t have secret AI technology. What they have is a better approach to adoption.

1. Start with the Business Problem

Instead of asking, “Where can we use AI?”, the winners ask, “What’s our biggest pain point?” They focus on problems with measurable outcomes, often in less glamorous but high-ROI areas:

• Customer service: AI-powered chatbots for 24/7 support.

• HR: Automating candidate screening and employee FAQs.

• Software engineering: Code generation and debugging.

• Finance: Fraud detection and report generation.

These functions deliver immediate cost savings and efficiency, building early momentum.

2. Integrate, Don’t Isolate

The best companies don’t ask employees to abandon their tools. Instead, they integrate AI directly into existing systems, making adoption seamless. They also empower frontline managers to own AI projects, creating a network of “AI champions” who advocate for the change.

3. Build Trust Through Governance

Rather than seeing governance as a roadblock, successful companies use it as a trust-building tool. By putting clear rules around data, privacy, and bias, they reassure both employees and regulators. This “trust dividend” allows projects to scale with confidence.

Actionable Takeaways for Beating the Odds

If you want your AI initiative to be in the winning 5%, here’s a simple roadmap:

• Start with impact. Solve a clear business problem with measurable outcomes.

• Secure leadership buy-in. An executive sponsor is critical for momentum.

• Empower employees. Turn frontline workers into AI champions through training and integration.

• Plan for the J-curve. Accept that productivity may dip before gains appear.

• Establish governance early. Data, privacy, and ethics policies must be in place from day one.

How We Approach AI Projects at Predli

Over the years, we’ve seen a common pattern: the pilots that stall are rarely the ones with bad technology - they’re the ones that never connect to real business needs or people’s day-to-day work. That’s why, when we take on a new project, we don’t start with the model or the data. We start with the context: Who will use this? How will it change their work? What’s the outcome that actually matters?

Working this way means the first steps are often slower - more conversations, more mapping, more co-creation with teams. But we’ve learned that it pays off. The moment AI feels like “just another pilot,” momentum is already lost. The moment it feels like a natural extension of how the organisation already works, adoption happens almost on its own.

Not every project is the right project. Some ideas look exciting on paper but won’t create lasting value in practice. That’s why we spend time upfront deciding together with our clients where AI can make a real difference - and sometimes, that means saying no. In our experience, that clarity at the start is what makes scaling possible later.

When it comes to governance, many of our clients already see its importance - it’s often one of the reasons they come to us in the first place. Clear thinking around data, privacy, and ethics isn’t a blocker - it’s what makes people feel safe enough to actually adopt and scale AI.

This approach doesn’t remove the friction of change - nothing does. But it channels it. Friction becomes the signal that something real is happening, not just an experiment. That’s what turns AI from a short-lived pilot into a lasting capability.

Conclusion: The Hidden Opportunity

The headline “95% of GenAI pilots fail” might sound discouraging, but it’s actually a wake-up call. The failure isn’t about the technology but about organisations not being ready for it.

The small minority who succeed prove that with the right strategy, culture, and governance, generative AI can drive massive value. And when seen in the broader context of IT project history, today’s failure rate is less a sign of hype than a reminder of how complex technology integration has always been. For everyone else, the real challenge isn’t “Does AI work?” but “Are we prepared to make it work?”

Generative AI won’t magically fix broken systems. But for companies ready to embrace the friction and adapt, it can be the catalyst for real transformation.