Three Paradoxes of AI Adoption

AI is no longer a futuristic promise; it is becoming infrastructure. Two recent reports, OpenAI’s “How People Use ChatGPT” and Anthropic’s “Economic Index”, reveal not just how fast adoption is happening, but how uneven, paradoxical, and political this shift is.

Taken together, the reports don’t just show what people do with AI. They show us something more profound: how quickly societies adapt, who gets left behind, and what kind of trust we’re willing to place in machines.

Paradox 1: Adoption is faster than ever - but trust lags behind

Technology usually spreads slowly. It took more than 30 years for electricity to reach 80% of U.S. households. The internet needed more than a decade to move from niche to necessity.

AI is different. Anthropic’s data shows that the share of American workers using AI on the job has doubled in just two years. No other general-purpose technology has ever diffused this quickly.

And yet, when you dig into the data, a hesitation emerges.

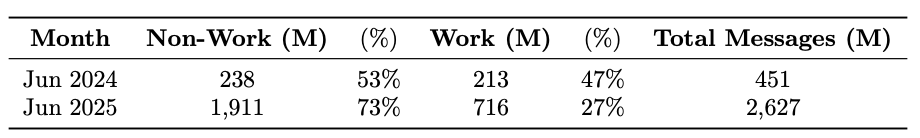

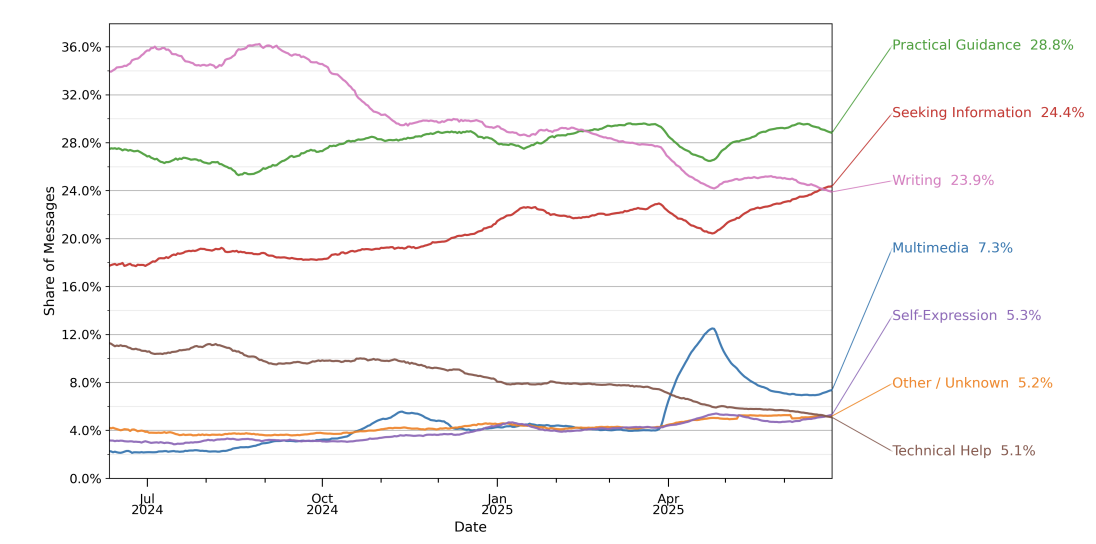

• OpenAI’s findings: Over 70% of ChatGPT use is not work-related. The most common tasks? Writing, information seeking, and practical guidance. In other words: essays, explanations, email drafts, day-to-day support. Helpful, but low-stakes.

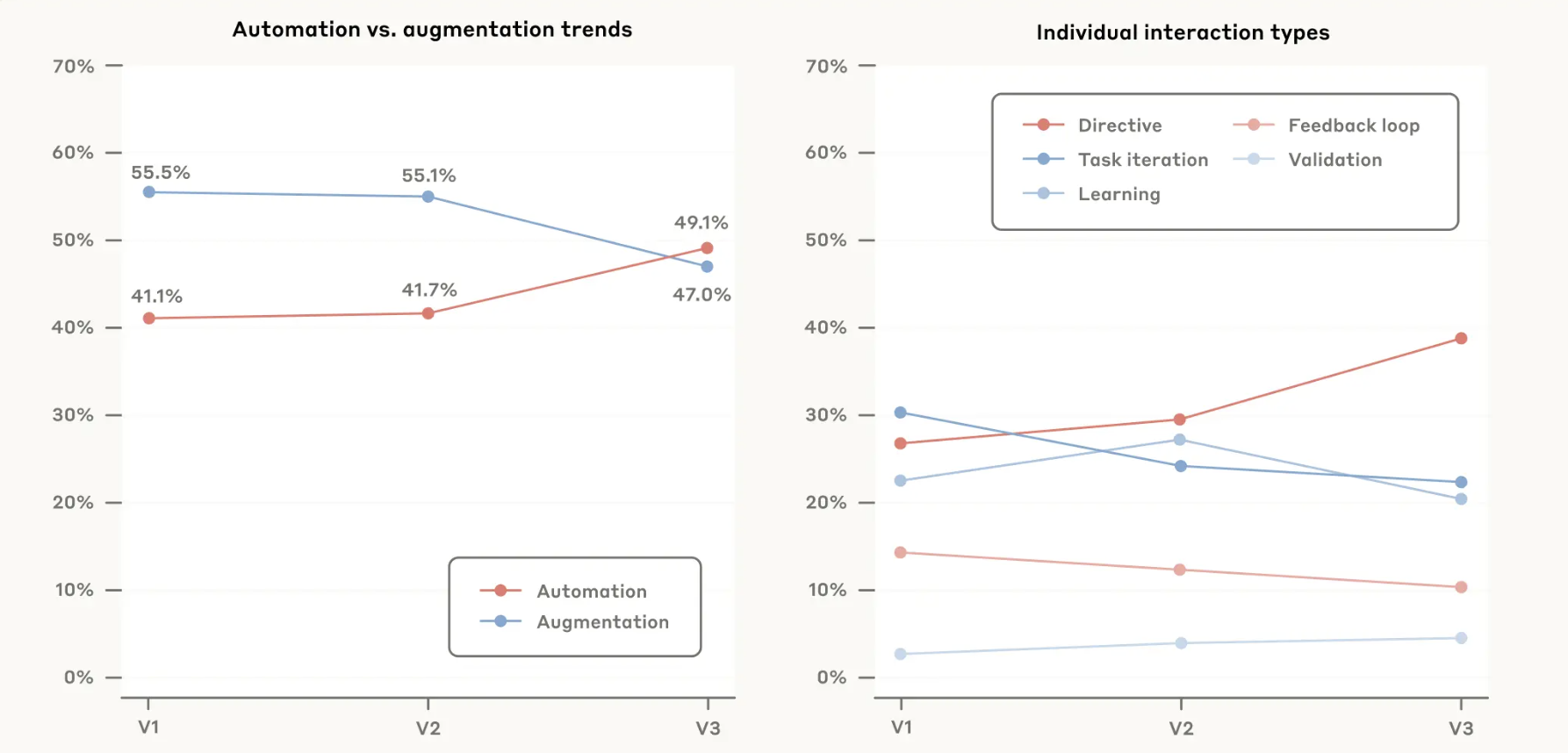

• Anthropic’s split: On the consumer side, Claude is used mostly for coding, education, and science - again, areas where mistakes are tolerable. But in the enterprise API channel, 77% of usage follows automation patterns. Here, companies are already letting AI handle entire workflows.

This is the paradox: adoption is fast, but trust is shallow. Consumers happily experiment, but stop short of full delegation. Companies, with data pipelines and oversight, leap further.

At Predli, this is the gap we see every day: organizations want the productivity gains of automation, but only if they can trust the system. That means building AI that is transparent, explainable, and aligned with real business data - not just generic models.

Paradox 2: The biggest users today may not be the biggest winners tomorrow

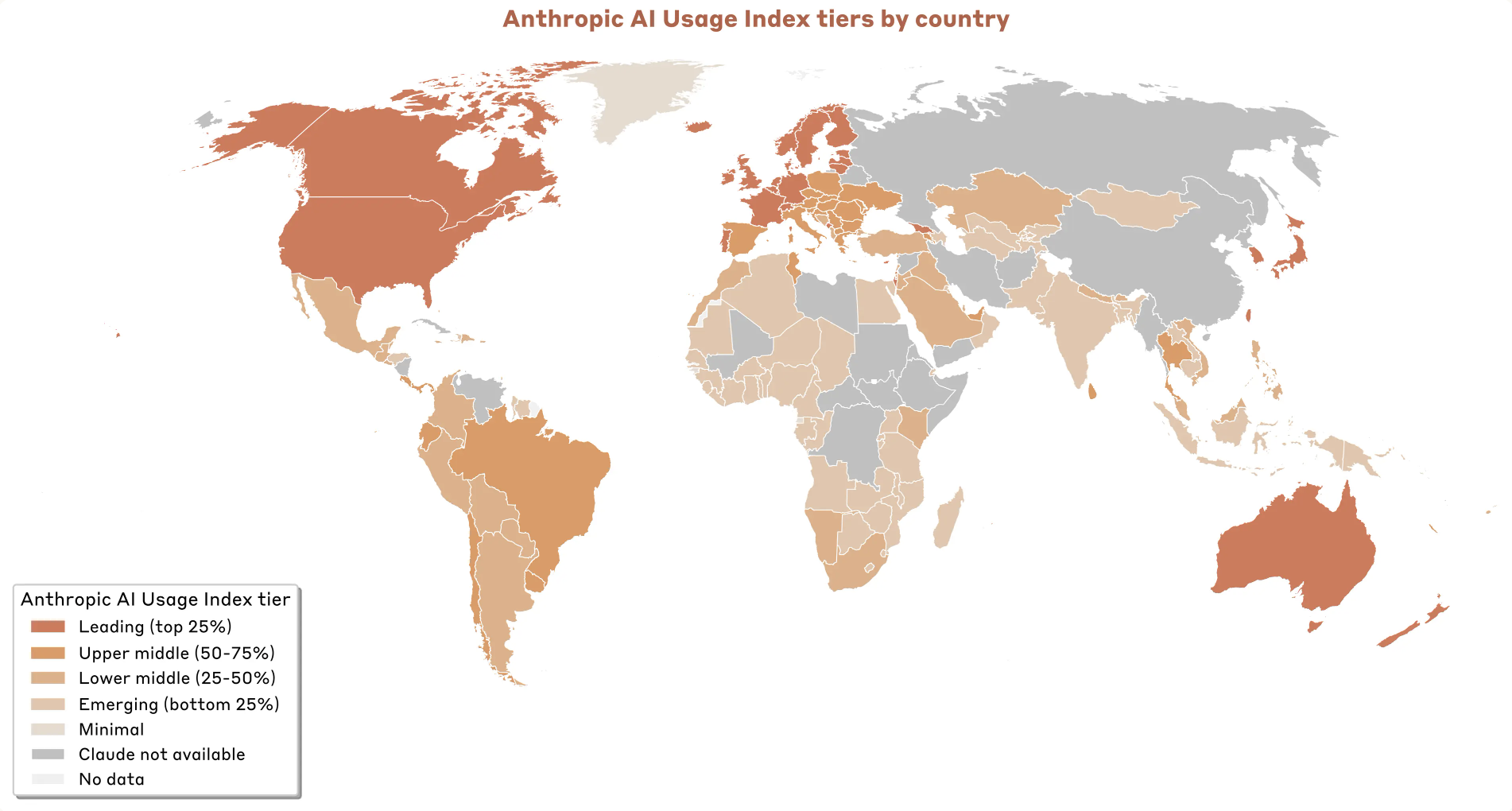

Anthropic’s Economic Index highlights a clear correlation: AI usage per capita rises with GDP per capita. Wealthy nations like Singapore, Israel, and South Korea are far ahead. Middle- and low-income countries lag behind, sometimes dramatically.

At first glance, the story looks familiar: technology reinforces inequality. Rich countries pull further ahead.

OpenAI’s report, however, complicates the picture. It shows that usage is growing fastest in low- and middle-income countries. Usage per capita is still lower there, but the growth trajectory is steep.

What does this mean? That the future map of AI leadership may not simply mirror today’s GDP rankings.

History offers parallels. Mobile phones leapfrogged landlines in much of Africa. Digital payments spread faster in India than in Europe. When barriers fall, latecomers sometimes innovate faster.

AI may follow a similar path - but only if infrastructure, education, and policy allow it. That’s the critical hinge: technology doesn’t spread evenly; it spreads where systems allow it to take root.

Predli’s work with clients often reflects this dynamic. The challenge isn’t just “access to AI”; it’s building the right infrastructure around it so it can deliver value. Whether in advanced economies or emerging markets, the winners will be those who manage to integrate AI into real workflows, not just experiment with it.

Paradox 3: AI feels personal - but its future is political

If you want to glimpse the future of work, look at the lecture hall, not the boardroom.

Neither report highlights students as a category, but the fingerprints are everywhere.

• OpenAI shows that writing and information seeking dominate non-work use. It’s a safe bet that a large share comes from students.

• Anthropic shows that educational and scientific tasks are both climbing as a share of total usage.

For students, AI is not “the future”. It’s simply how homework gets done. They are normalizing it in ways that will ripple outward for decades. When this generation enters the workforce, they won’t debate whether AI belongs in daily workflows. They’ll ask how anyone ever worked without it.

But here’s the paradox: while adoption feels deeply personal (homework, essays, study help), the trajectory of AI will be decided in political arenas.

• Singapore and South Korea: treat AI as national infrastructure. They pair clear guidelines with heavy investment - and usage per capita soars.

• The U.S.: lets the market lead. This has unleashed explosive growth, but with less consumer protection and more ethical gray zones.

• Europe: bets on a regulation-first approach. The AI Act aims to build trust and safety, but risks slowing startups under compliance burdens.

The question is not simply who builds the best model. It’s who writes the best rules of the game.

This is also why Predli emphasizes not only technology but also governance. Deploying AI responsibly means aligning with regulation, ensuring fairness, and preparing for future standards - because the real barrier to adoption is often not capability, but compliance and trust.

Where this leaves us

OpenAI’s and Anthropic’s reports paint a picture that is both exhilarating and unsettling.

• AI is being adopted faster than any technology in history - but trust remains fragile.

• Wealthy nations dominate usage today - but tomorrow’s growth may come from elsewhere.

• AI feels like a personal tool - but its future depends on political choices.

These paradoxes matter because they reveal the stakes of the moment. AI isn’t just about what machines can do. It’s about what people are willing to delegate, what societies are willing to invest in, and what rules governments choose to write.

At Predli, we see this intersection every day: the speed of adoption, the fragility of trust, the uneven playing field. Our mission is to help organizations navigate these paradoxes - turning AI from a fast-moving trend into a sustainable source of value.