AI Cost Optimization: Token Tariffs and the Case for Custom Tokenizers

As language becomes the new interface for software, it also becomes a measurable cost driver. Large language models break text into tokens: small units of text that the model can interpret and process. Understanding how tokens work is now central to managing both the performance and the economics of AI systems.

This new cost paradigm highlights a subtle but important shift: we’re no longer paying for computation alone, but for the expression of meaning itself. Two teams performing the same task, with the same model, might pay vastly different amounts depending on how they write, what language they use, and how efficiently they manage their context windows.

Optimizing this new unit of cost (the token) is becoming a defining capability for organisations using AI at scale.

From words to tokens: the invisible economy of text

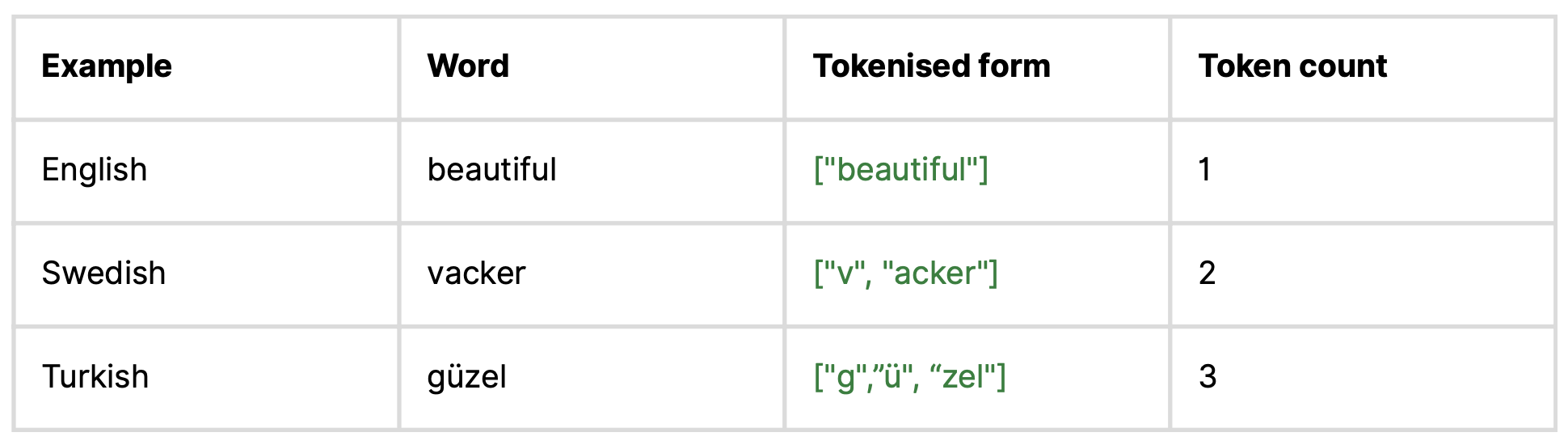

To a human reader, a sentence is made up of words. To an LLM, it is made up of tokens: subword fragments produced by algorithms such as Byte-Pair Encoding (BPE) or Unigram LM. These algorithms split text into smaller, reusable chunks, trading off vocabulary size against generalization.

Even though all three words mean beautiful, Turkish requires roughly three times as many tokens to express it. When every 1,000 tokens are billed, that difference becomes a direct economic variable.

Token tariffs: the economics of meaning

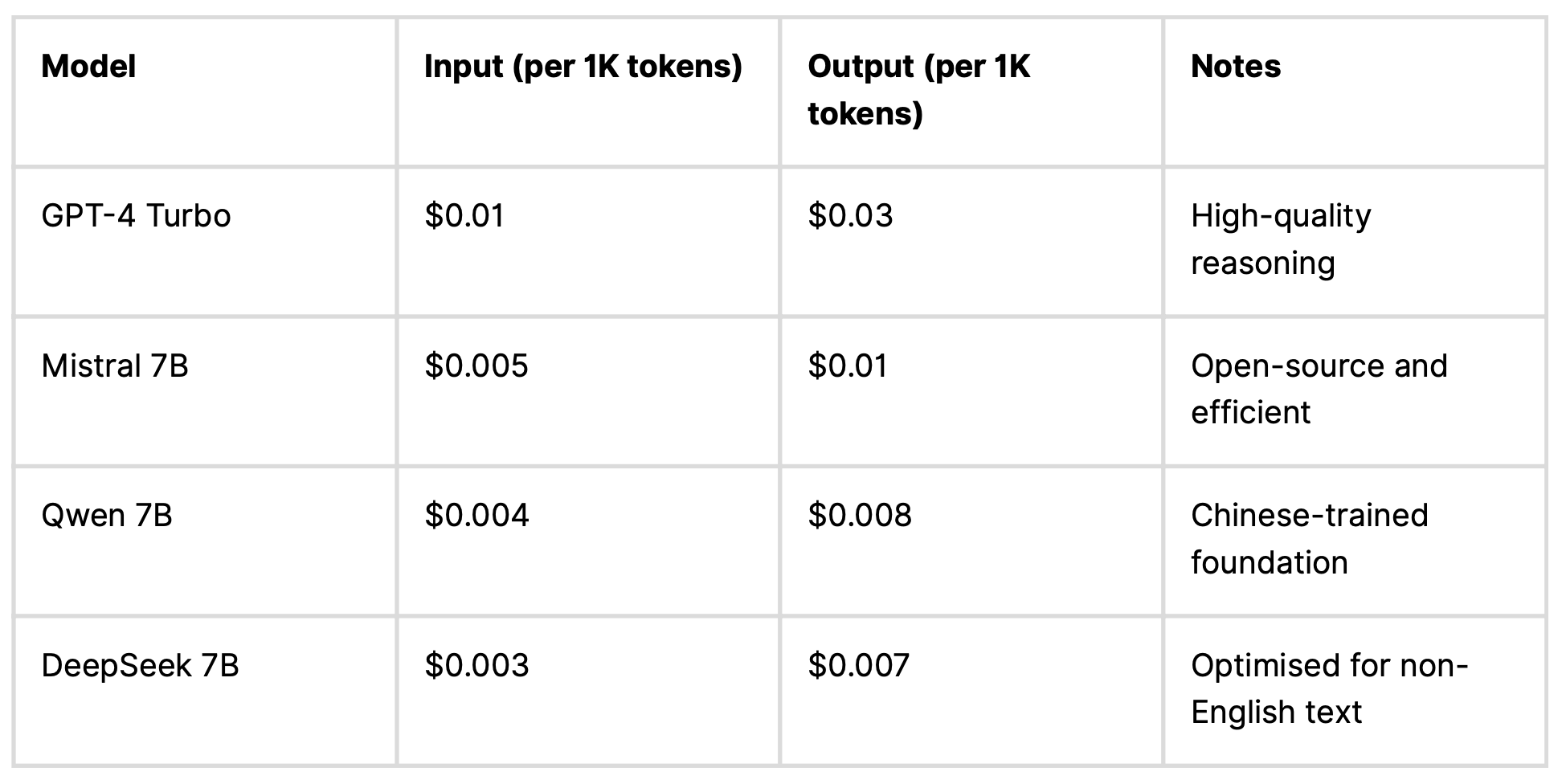

Every commercial LLM provider now charges by the token, typically quoting a price per 1,000 tokens that is often split between input and output.

At first glance, this seems simple: pay for what you use. But tokenization isn’t neutral. It depends on how the model splits your text, which varies by language and by model.

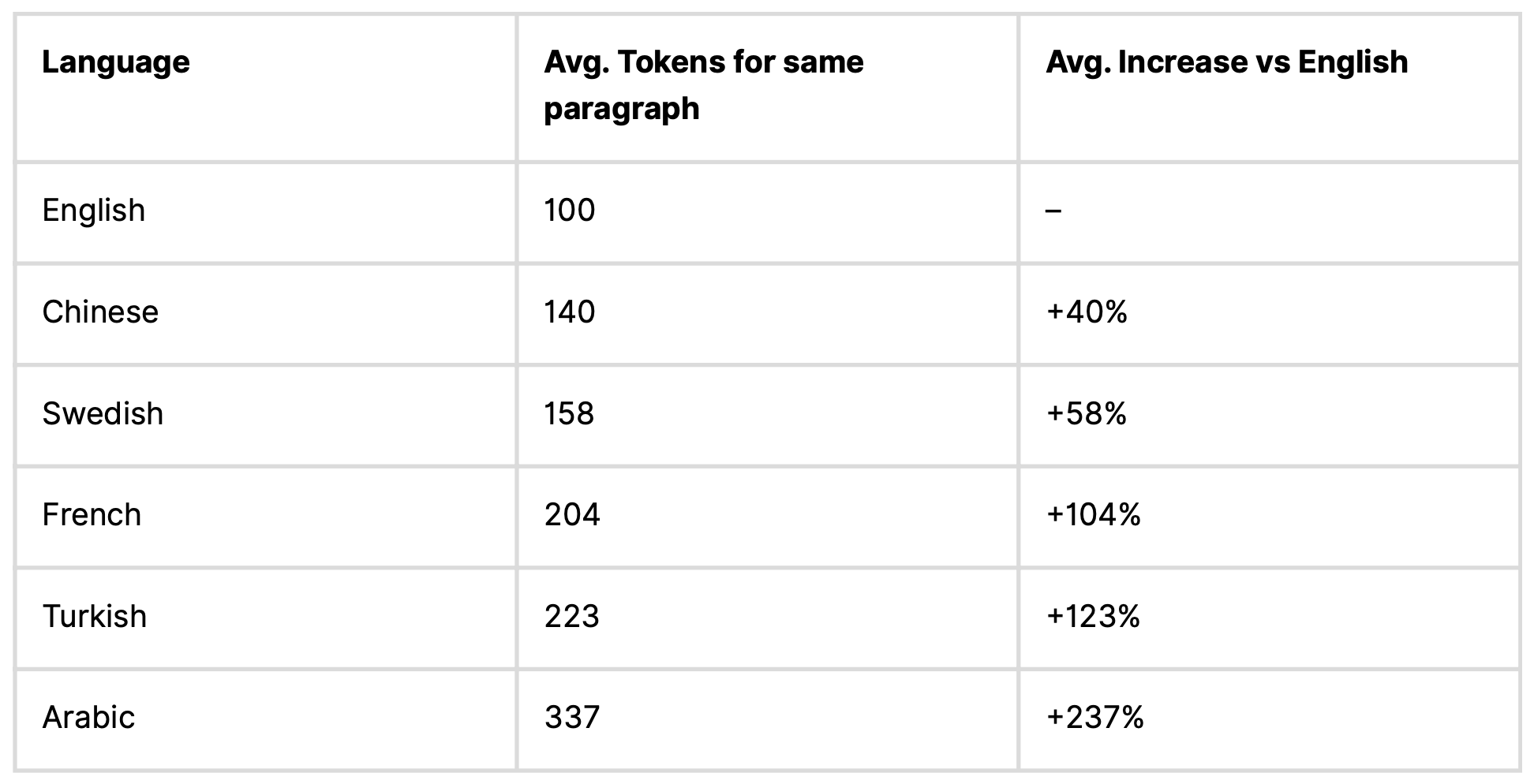

In our analysis, we compared how several open and commercial LLMs tokenize the same paragraph across five languages. The figures below represent the average increase in tokens compared to English across four models:

The same meaning can cost more than three times as much to process in Arabic as in English, a clear sign of structural inefficiency in multilingual AI.

This “token tariff” reveals an underlying economic bias: models trained primarily on English represent English text more efficiently. For global organisations, this means that multilingual operations, in areas like customer support, localization, and analytics, can carry an invisible cost penalty depending on the language used.
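The comparison described above can be sketched as a small calculation: given token counts for the same paragraph per model and language, compute the average percentage increase over English. The function name `token_tariff`, the model names, the language code `xx`, and the counts are placeholders for illustration, not the measurements from the analysis.

```python
from statistics import mean


def token_tariff(counts_by_model, baseline="en"):
    """Average % increase in tokens per language relative to the baseline.

    counts_by_model maps model name -> {language code -> token count}
    for the same paragraph translated into each language.
    """
    languages = {lang for counts in counts_by_model.values() for lang in counts}
    tariffs = {}
    for lang in sorted(languages - {baseline}):
        increases = [
            (counts[lang] / counts[baseline] - 1.0) * 100.0
            for counts in counts_by_model.values()
            if lang in counts
        ]
        tariffs[lang] = mean(increases)
    return tariffs


# Placeholder counts for illustration only (hypothetical models and language).
example_counts = {
    "model_a": {"en": 100, "xx": 150},
    "model_b": {"en": 120, "xx": 240},
}
print(token_tariff(example_counts))
```

In practice the counts would come from running each model's own tokenizer over the translated paragraph.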

💡 Did you know?

Even models with large 1M-token context windows reach their limits faster in languages that consume more tokens per sentence. Less usable context means more summarization, degraded reasoning quality, and higher cost.
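As a rough illustration of that effect, the sketch below converts a nominal context window into its English-equivalent capacity for a given token tariff. The function name and the 200% tariff are assumptions chosen for the example.

```python
def effective_context(window_tokens, tariff_percent):
    """English-equivalent capacity of a context window.

    tariff_percent is the extra tokens a language needs relative to English,
    e.g. 200 means three times as many tokens for the same content.
    """
    return window_tokens / (1 + tariff_percent / 100)


# Hypothetical: a 1M-token window and a language with a 200% tariff.
print(round(effective_context(1_000_000, 200)))
```

Under that assumption, a nominal 1M-token window holds about as much content as a 333K-token window would hold in English.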

Why token inefficiency exists

The root cause lies in how tokenizers are trained. Most large language models are trained on English-dominant datasets: web pages, books, forums, and code. During tokenizer training, algorithms merge the most frequent character pairs into stable subword units. Because English patterns dominate this data, English text is encoded with higher efficiency.
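The merge process can be sketched with a toy BPE trainer. This is a minimal illustration, not any production tokenizer: it works on whole words without end-of-word markers, and the tiny corpus (one word repeated 50 times, a second word seen once) is made up to mimic a dominant language in the training data.

```python
from collections import Counter


def apply_merge(symbols, pair):
    """Replace each adjacent occurrence of `pair` with one merged symbol."""
    out, i = [], 0
    while i < len(symbols):
        if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
            out.append(symbols[i] + symbols[i + 1])
            i += 2
        else:
            out.append(symbols[i])
            i += 1
    return tuple(out)


def train_bpe(words, num_merges):
    """Learn up to `num_merges` merges by repeatedly fusing the most frequent pair."""
    corpus = Counter(tuple(word) for word in words)  # symbols -> frequency
    merges = []
    for _ in range(num_merges):
        pair_counts = Counter()
        for symbols, freq in corpus.items():
            for pair in zip(symbols, symbols[1:]):
                pair_counts[pair] += freq
        if not pair_counts:
            break
        best = max(pair_counts, key=pair_counts.get)
        merges.append(best)
        merged = Counter()
        for symbols, freq in corpus.items():
            merged[apply_merge(symbols, best)] += freq
        corpus = merged
    return merges


def encode(word, merges):
    """Tokenize a word by applying the learned merges in training order."""
    symbols = tuple(word)
    for pair in merges:
        symbols = apply_merge(symbols, pair)
    return list(symbols)


# Toy corpus: the dominant word is seen 50 times, the other only once.
merges = train_bpe(["token"] * 50 + ["jeton"], num_merges=4)
print(encode("token", merges))  # ['token']
print(encode("jeton", merges))  # ['j', 'e', 'to', 'n']
```

With the same merge budget, the frequent word collapses into a single token while the rare word stays fragmented, which is the bias described above in miniature.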

Languages with richer morphology, such as Turkish, Arabic, Swedish or French, generate far more unique word forms. The same root word can appear in dozens of variations, making it harder for tokenizers to learn compact, reusable representations. The result is a structural inefficiency built into the model itself: non-English text expands faster in tokens, costs more to process, and fills up the model’s context window more quickly. Over time, a linguistic bias has evolved into an economic one, where the language you use directly influences what you pay.

Not all models are equal

Our analysis shows that this inefficiency varies not only between languages, but also between models. Even when processing the exact same paragraph, token counts fluctuated sharply across architectures.

For instance, Arabic text required anywhere from 68% more tokens in Qwen to over 340% more in DeepSeek, depending on how efficiently each tokenizer handled the script.

This variation highlights that token inefficiency isn’t just a linguistic issue - it’s a design issue. Models trained on broader multilingual datasets, like Qwen, tend to encode meaning more compactly, while English-heavy tokenizers, such as those used by OpenAI and DeepSeek, still inflate non-English text significantly.

In short, token efficiency depends as much on architecture and training data as on language itself, a reminder that the economics of AI are, at their core, shaped by design choices.

The hidden layers of token cost

Token tariffs don’t exist in isolation: each additional token also consumes compute and memory and adds latency. Over millions of requests, these invisible costs can quickly become significant.

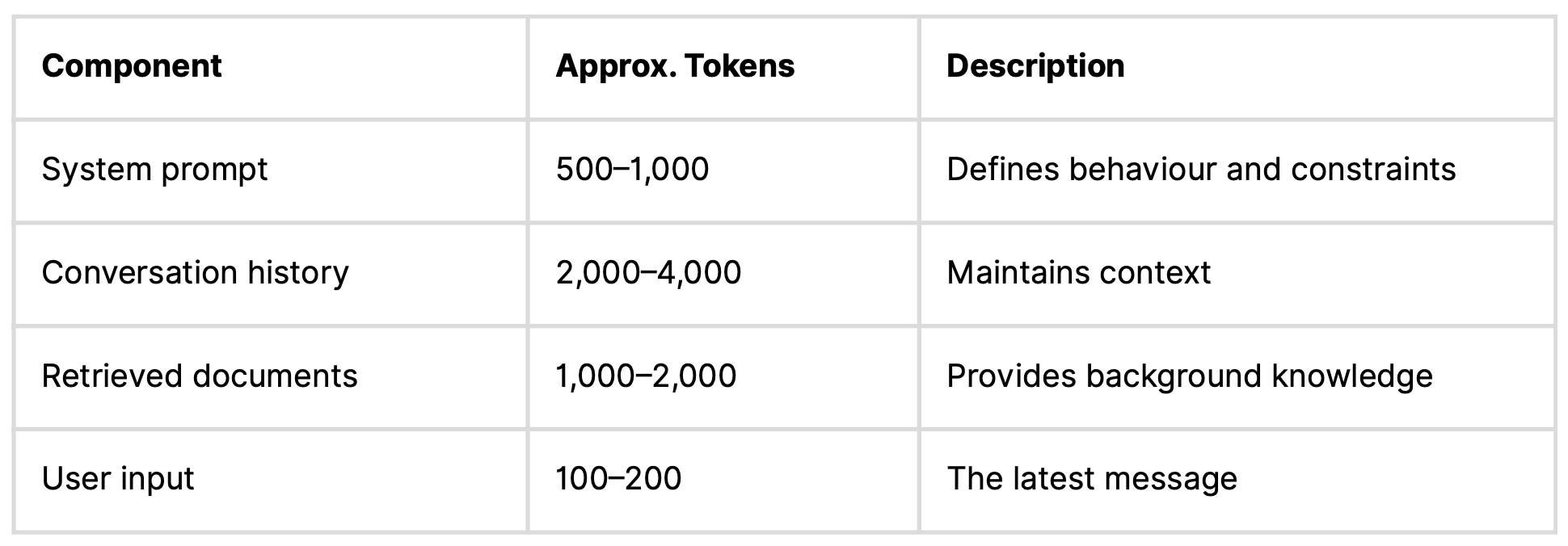

A typical chatbot interaction might include:

Before the model even starts generating, a single query can already reach 5,000–10,000 tokens. At production scale, these hidden costs can outweigh even model choice in total spend.
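To make the pre-generation overhead concrete, the sketch below adds up a per-request token budget. The component names and sizes are hypothetical stand-ins chosen to land in the 5,000–10,000 range mentioned above; they are not the article's breakdown.

```python
# Hypothetical per-request components (token counts are illustrative only).
request_budget = {
    "system_prompt": 800,
    "conversation_history": 2500,
    "retrieved_context": 3000,
    "user_message": 100,
}


def summarize_budget(budget):
    """Return the total token count and each component's share of it."""
    total = sum(budget.values())
    shares = {name: count / total for name, count in budget.items()}
    return total, shares


total, shares = summarize_budget(request_budget)
print(f"total input tokens: {total}")
for name, share in sorted(shares.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{name:>22}: {share:.0%}")
```

In a breakdown like this, the user's actual message is a small fraction of what gets billed, so the surrounding context is usually the first place to look for savings.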

Token optimization as an engineering discipline

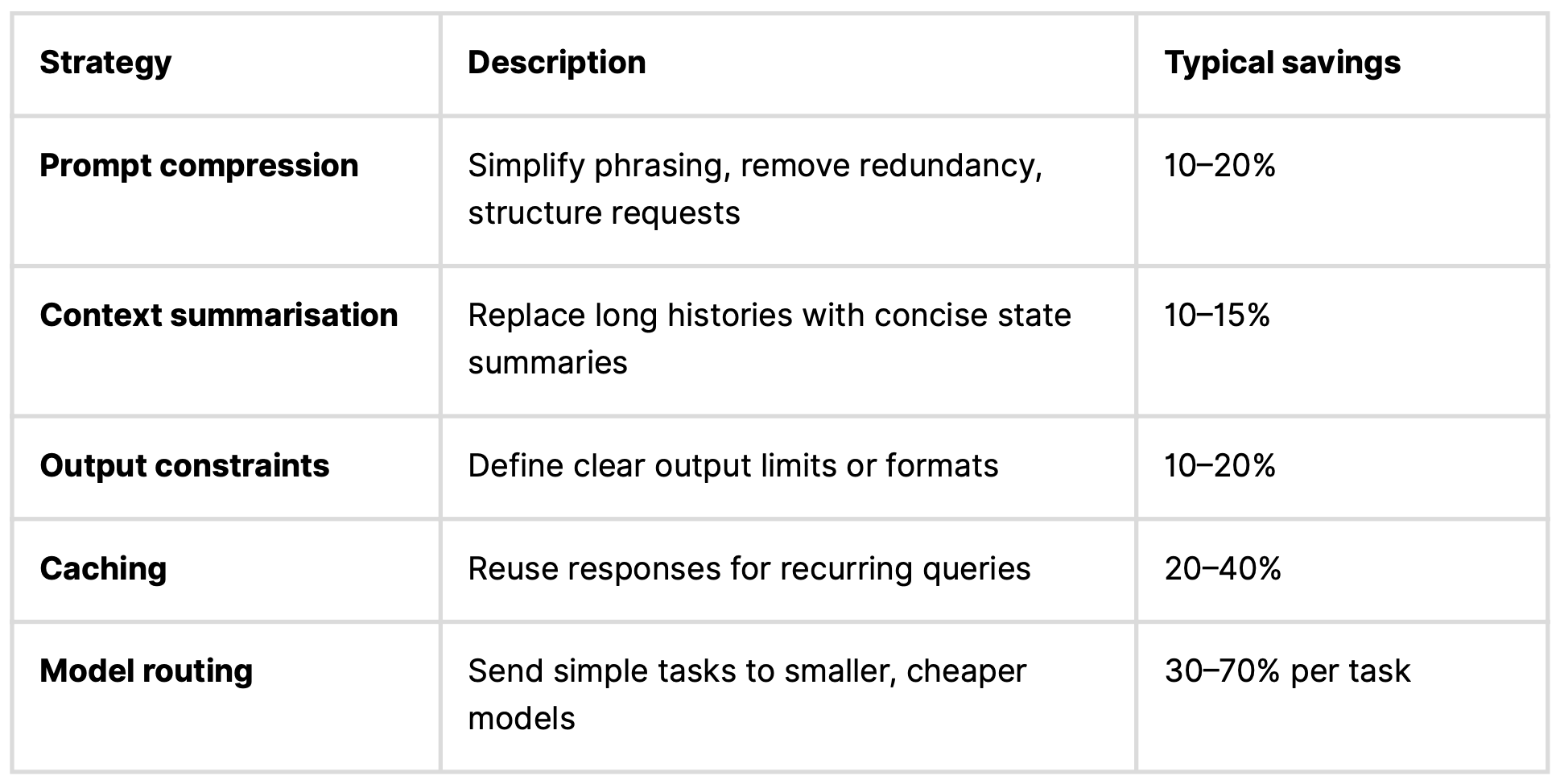

The first step toward optimization is visibility. You can’t optimize what you don’t measure, yet many teams still lack metrics for token usage per request, user, or feature. Once you know where your tokens go, several strategies can make a meaningful difference:

Together, these methods can reduce total token usage by 30-50% without any visible loss in quality. At scale, that’s often a larger saving than switching providers or models.
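The visibility step can start very small. Below is a minimal sketch of an in-memory ledger that aggregates token usage per user and per feature. The class and field names are assumptions for illustration; a real system would take the counts from the provider's usage metadata and write them to a metrics store.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Usage:
    requests: int = 0
    input_tokens: int = 0
    output_tokens: int = 0

    @property
    def total_tokens(self):
        return self.input_tokens + self.output_tokens


class TokenLedger:
    """Aggregates token usage per user and per feature (hypothetical helper)."""

    def __init__(self):
        self.by_user = defaultdict(Usage)
        self.by_feature = defaultdict(Usage)

    def record(self, user, feature, input_tokens, output_tokens):
        # Update both aggregates for every request.
        for usage in (self.by_user[user], self.by_feature[feature]):
            usage.requests += 1
            usage.input_tokens += input_tokens
            usage.output_tokens += output_tokens

    def top_features(self, n=3):
        """Features ranked by total tokens consumed, largest first."""
        ranked = sorted(
            self.by_feature.items(),
            key=lambda item: item[1].total_tokens,
            reverse=True,
        )
        return ranked[:n]


ledger = TokenLedger()
ledger.record("u1", "search", input_tokens=900, output_tokens=200)
ledger.record("u2", "search", input_tokens=1000, output_tokens=100)
ledger.record("u1", "summarize", input_tokens=5000, output_tokens=300)

for feature, usage in ledger.top_features():
    print(feature, usage.requests, usage.total_tokens)
```

Even a coarse view like this shows which features dominate spend and where trimming context would pay off first.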

Beyond optimisation: custom tokenizers as strategic leverage

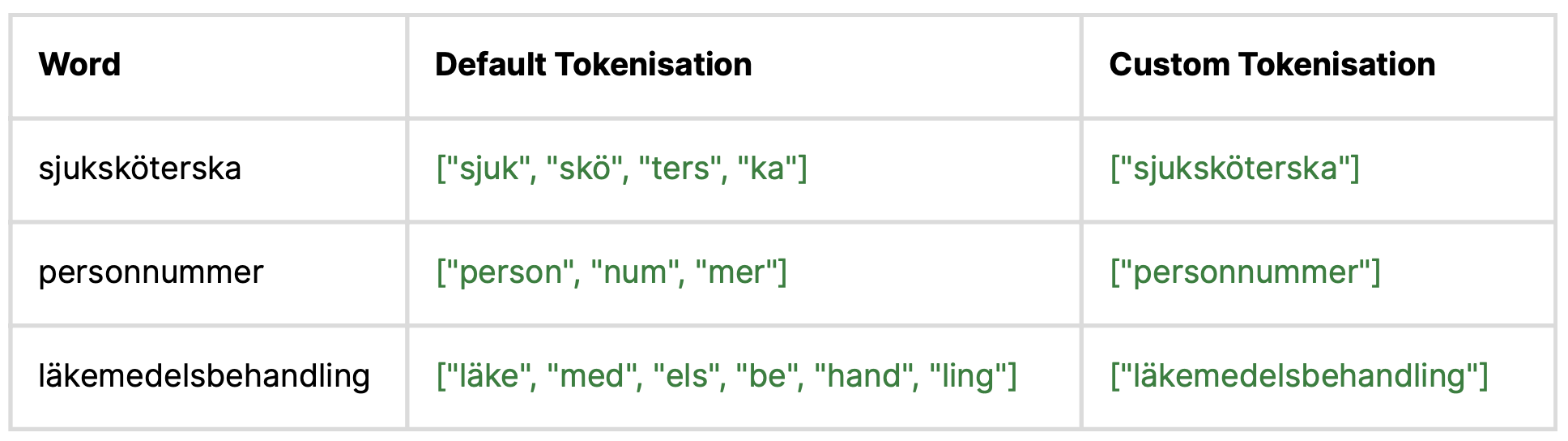

Prompt engineering and model routing address surface-level efficiency, while custom tokenization tackles the issue at its core. A custom tokenizer is trained on your own corpus (your company’s data, your customers’ language, your domain-specific vocabulary) and learns to treat frequent or specialised terms as single units rather than splitting them apart.

For example, in Swedish healthcare data:

Depending on the language and domain, this can reduce total token counts by 10-40%. For self-hosted or fine-tuned models, this translates directly into lower compute costs and faster inference.
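The effect of adding domain terms to a vocabulary can be illustrated with a deliberately simple greedy longest-match tokenizer. This is not BPE or any production algorithm, and the vocabularies and the Swedish compound "läkemedelsbehandling" (drug treatment) are an illustrative example, not taken from the article's data.

```python
def greedy_tokenize(text, vocab):
    """Longest-match-first tokenization with single-character fallback."""
    max_len = max([1] + [len(term) for term in vocab])
    tokens, i = [], 0
    while i < len(text):
        # Try the longest candidate first; a single character always matches.
        for size in range(min(max_len, len(text) - i), 0, -1):
            piece = text[i:i + size]
            if size == 1 or piece in vocab:
                tokens.append(piece)
                i += size
                break
    return tokens


# Hypothetical vocabularies: generic subwords vs. the same plus one domain term.
generic_vocab = {"läke", "medel", "behandling"}
domain_vocab = generic_vocab | {"läkemedelsbehandling"}

term = "läkemedelsbehandling"
print(greedy_tokenize(term, generic_vocab))
print(greedy_tokenize(term, domain_vocab))
```

The generic vocabulary splits the compound into four pieces, while the domain vocabulary emits it as one token. Real savings depend on how often such terms occur in the corpus.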

Even when using managed APIs, a custom tokenizer can serve as a pre-processing layer, a form of intelligent compression that shortens input before it reaches the model. That can be as simple as replacing recurring phrases with placeholders or using reversible shorthand mappings for common structures.

The goal is the same: transmit meaning more efficiently.
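A reversible placeholder mapping of the kind mentioned above might look like the sketch below. The bracket markers, function names, and sample phrases are assumptions for illustration. Whether this actually saves tokens depends on how the target tokenizer encodes the placeholders, so it should be measured rather than assumed.

```python
OPEN, CLOSE = "⟦", "⟧"  # assumed marker characters that never occur in the input


def build_codebook(phrases):
    """Map each phrase to a delimited placeholder, longest phrases first."""
    ordered = sorted({p for p in phrases if p}, key=lambda p: (-len(p), p))
    for phrase in ordered:
        if OPEN in phrase or CLOSE in phrase:
            raise ValueError("phrases must not contain the marker characters")
    return {phrase: f"{OPEN}{i}{CLOSE}" for i, phrase in enumerate(ordered)}


def compress(text, codebook):
    """Replace recurring phrases with their placeholders."""
    if OPEN in text or CLOSE in text:
        raise ValueError("text already contains marker characters")
    for phrase, code in codebook.items():  # dict order keeps longest first
        text = text.replace(phrase, code)
    return text


def expand(text, codebook):
    """Invert compress() by restoring the original phrases."""
    for phrase, code in codebook.items():
        text = text.replace(code, phrase)
    return text


codebook = build_codebook(["Thank you for contacting support", "order number"])
message = (
    "Thank you for contacting support. Your order number is 42. "
    "Thank you for contacting support."
)
packed = compress(message, codebook)
print(packed)
print(expand(packed, codebook) == message)
```

If the model needs to understand the replaced phrases, the mapping has to be supplied once (for example in the system prompt), which only pays off when the phrases recur often enough.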

A realistic cost scenario

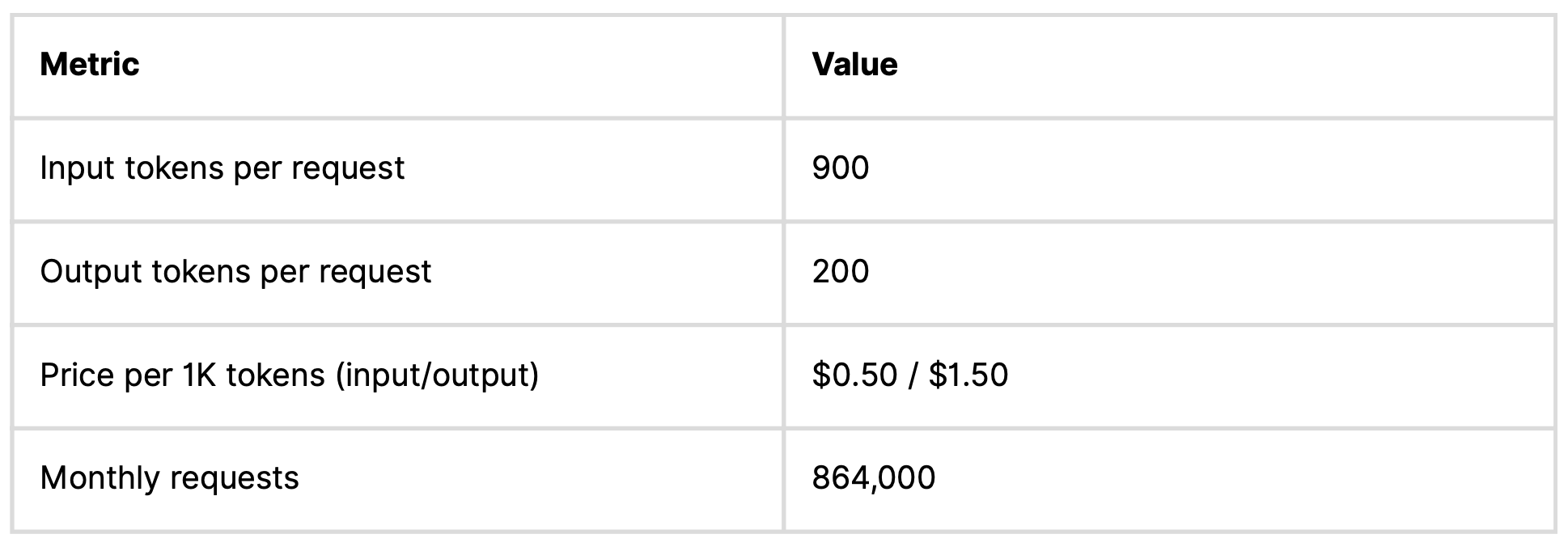

Let’s consider a multilingual support assistant handling 20 requests per minute - about 864,000 per month.

Base cost:

(900 × 0.0005 + 200 × 0.0015) × 864,000 = $648,000

After prompt and context optimization (-40% tokens):

→ about $389,000 per month

Add a custom tokenizer (-12% input tokens):

→ about $361,000 per month

That’s roughly $287,000 saved monthly, purely through engineering efficiency, not through vendor negotiation or model downgrades.
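The scenario can be reproduced with a few lines of arithmetic. The sketch below takes the numbers from the base-cost formula at face value (900 and 200 tokens per request at rates of 0.0005 and 0.0015, 864,000 requests per month). It assumes that the 40% reduction applies to both token counts and that the 12% reduction applies to the first (input) term only. Exact dollar figures are sensitive to how those reductions are applied.

```python
REQUESTS_PER_MONTH = 20 * 60 * 24 * 30  # 20 requests/minute over a 30-day month
INPUT_RATE, OUTPUT_RATE = 0.0005, 0.0015  # rates as they appear in the formula


def monthly_cost(input_tokens, output_tokens):
    """Monthly spend for a given number of input/output tokens per request."""
    per_request = input_tokens * INPUT_RATE + output_tokens * OUTPUT_RATE
    return per_request * REQUESTS_PER_MONTH


base = monthly_cost(900, 200)
# Assumption: prompt/context optimization trims input and output by 40%.
optimized = monthly_cost(900 * 0.6, 200 * 0.6)
# Assumption: the custom tokenizer trims the remaining input by a further 12%.
with_tokenizer = monthly_cost(900 * 0.6 * 0.88, 200 * 0.6)

print(f"base:            ${base:,.0f}")
print(f"optimized:       ${optimized:,.0f}")
print(f"with tokenizer:  ${with_tokenizer:,.0f}")
print(f"monthly saving:  ${base - with_tokenizer:,.0f}")
```

Under these assumptions the script yields about $648,000, $388,800 and $360,800 per month.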

Fairness, sustainability, and the road ahead

Token optimization isn’t only a technical discipline; it’s a fairness and sustainability issue.

If English remains the cheapest language to process, multilingual users effectively pay a premium for expressing the same meaning.

And as our analysis shows, this isn’t just about language. Even among the same set of models, token efficiency can vary by several hundred percent, depending on how each tokenizer was trained and optimized.

That means cost and energy are no longer fixed attributes of AI, but outcomes of design choices. Every unnecessary token consumes compute, memory, and power. Reducing them isn’t just about lowering bills; it’s about building greener, fairer, and more inclusive AI systems.

In the years ahead, we’ll likely see:

• Language-balanced tokenizers trained on more diverse language data.

• Dynamic token routing, where models adjust context length based on complexity.

• Meaning-based billing, aligning cost with semantic content instead of token count.

Until then, awareness and optimization remain the most powerful levers available. Measuring, reducing, and (where possible) customising your token strategy can deliver immediate, measurable impact.

Token efficiency isn’t just about saving money. It’s about designing AI systems that process language fairly, intelligently, and sustainably. In the economics of AI, efficiency scales faster than power, and understanding tokens is where that efficiency begins.