Predictions for 2026

The past year has changed how organisations approach AI. What once felt experimental is becoming operational, and the discussion around the technology has grown more grounded. Models continue to improve, but the more meaningful shift is in how they are being applied. Agents are entering real workflows, robotics is moving toward practical deployment, and the boundary between physical and synthetic work is thinner than it was even a year ago.

As we look toward 2026, the most important developments are emerging not from model performance alone, but from the convergence of hardware advances, new interfaces, infrastructure shifts and organisational adaptation. Progress and correction are unfolding at the same time: new capabilities mature while questions about scalability, governance and the value of certain investments become increasingly prominent.

In this article, our team at Predli outlines the developments we believe will shape AI in 2026, not as speculative trends, but as trajectories already visible in how leading companies build systems and organise their work. Taken together, they point to an AI landscape that is becoming more capable and more integrated, but also more uneven, demanding clarity and long-term thinking from the organisations that plan to rely on it.

The Interface Revolution: AI Beyond Chats

1. Voice and Vision as AI-First Interfaces

AI-native interfaces are beginning to move beyond the smartphone, with smart glasses emerging as a credible new form factor. Meta’s Ray-Ban smart glasses already support AI-assisted capture and real-time queries, while Google has re-entered the category with new smart glasses initiatives tightly integrated with its multimodal AI stack. At the same time, other companies across the ecosystem, including OpenAI through its hardware collaboration with Jony Ive’s design studio, are building vision-based AI systems that treat cameras as primary inputs rather than accessories.

These devices are increasingly paired with eye-tracking, gesture recognition, and environmental sensing, technologies pioneered by firms such as Tobii, that allow systems to infer intent without explicit commands. When combined with on-device and hybrid AI models, voice and vision together reduce interaction friction and enable interfaces that are context-aware by default.

The broader implication is a shift away from screen-centric interaction toward continuous, ambient computing. By 2026, AI-first interfaces are likely to be defined less by apps and menus and more by systems that listen, see, and respond in real time—reshaping how users access information and control digital environments.

2. Beyond Chat-First Interfaces: Workflows & Autonomous Agents

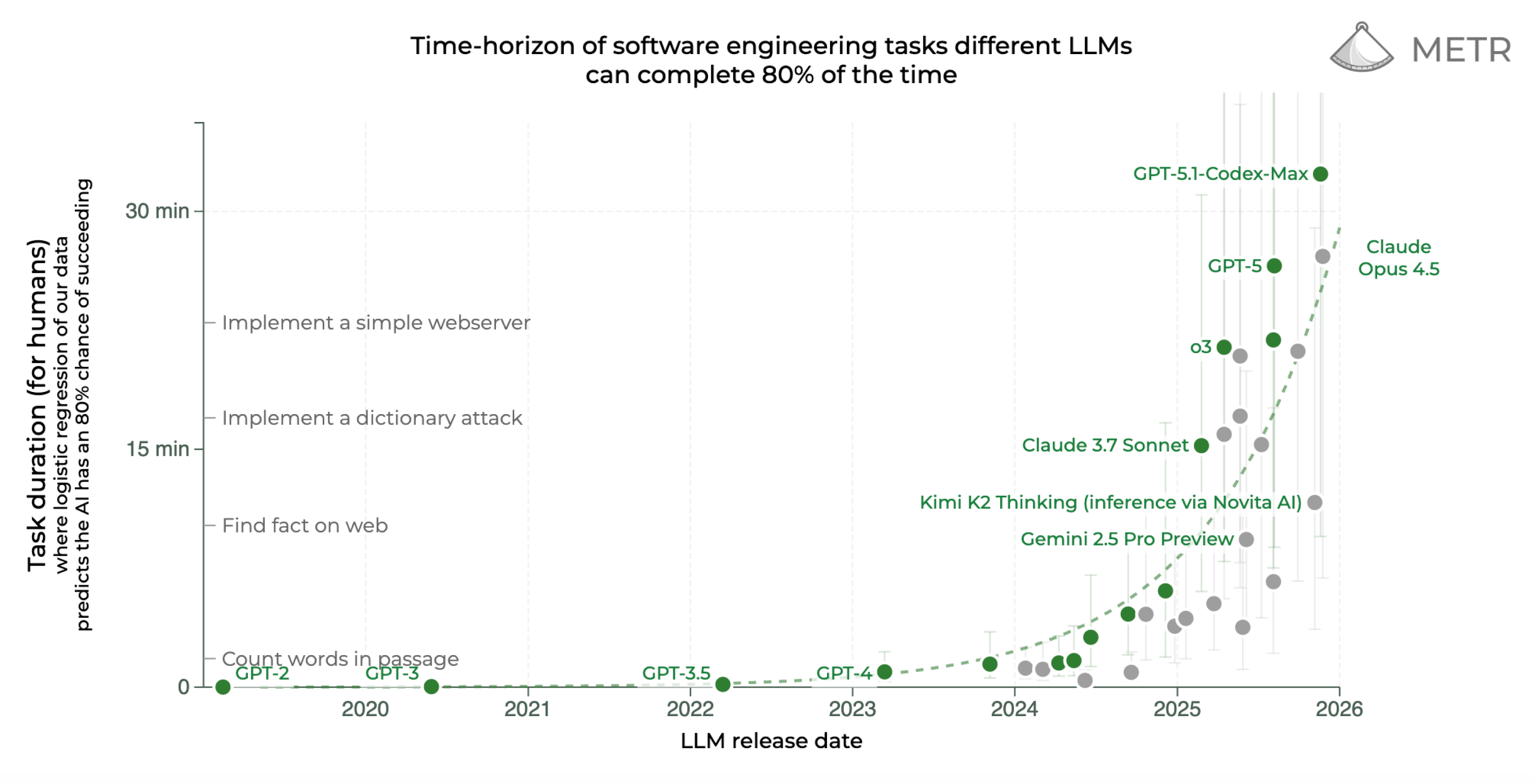

AI systems themselves are showing clear signs of maturation. One of the strongest indicators is the emergence of agents that can identify and resolve their own errors without human oversight. Early examples are already visible in models like OpenAI’s o1 and in agent systems demonstrated by Adept and Google DeepMind, which can retry tasks, analyse why an attempt failed, and adjust their strategy before trying again. As these agents gain access to real tools and real data, self-correction shifts from a useful enhancement to a core requirement, forming the foundation for AI systems expected to operate reliably in production environments.
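The retry, diagnose and adjust loop described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not how any of the named systems is implemented: `SelfCorrectingAgent`, `run_task` and `diagnose` are hypothetical names, and in a real agent the diagnosis step would typically be a model call rather than a hand-written rule.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class Attempt:
    strategy: str
    error: Optional[str]  # None means the attempt succeeded


@dataclass
class SelfCorrectingAgent:
    # Hypothetical callbacks: run_task returns None on success or an error
    # message on failure; diagnose proposes the next strategy to try.
    run_task: Callable[[str], Optional[str]]
    diagnose: Callable[[str, str], str]
    max_attempts: int = 3
    history: list = field(default_factory=list)

    def solve(self, strategy: str) -> bool:
        for _ in range(self.max_attempts):
            error = self.run_task(strategy)
            self.history.append(Attempt(strategy, error))
            if error is None:
                return True
            # Analyse the failure and adjust the strategy before retrying.
            strategy = self.diagnose(strategy, error)
        return False


# Toy stand-ins for a real tool call and a real failure analysis.
def run_task(strategy: str) -> Optional[str]:
    return None if strategy == "paginate" else "timeout: response too large"


def diagnose(strategy: str, error: str) -> str:
    return "paginate" if "too large" in error else strategy


agent = SelfCorrectingAgent(run_task, diagnose)
succeeded = agent.solve("fetch_all")
```

Keeping every attempt in `history` is a deliberate choice in this sketch: a bounded retry count plus an audit trail is what lets a human review what the agent tried once it runs against real tools and data.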

Intelligent Machines in the Physical World

3. Robotics as the Leading Edge of Applied AI

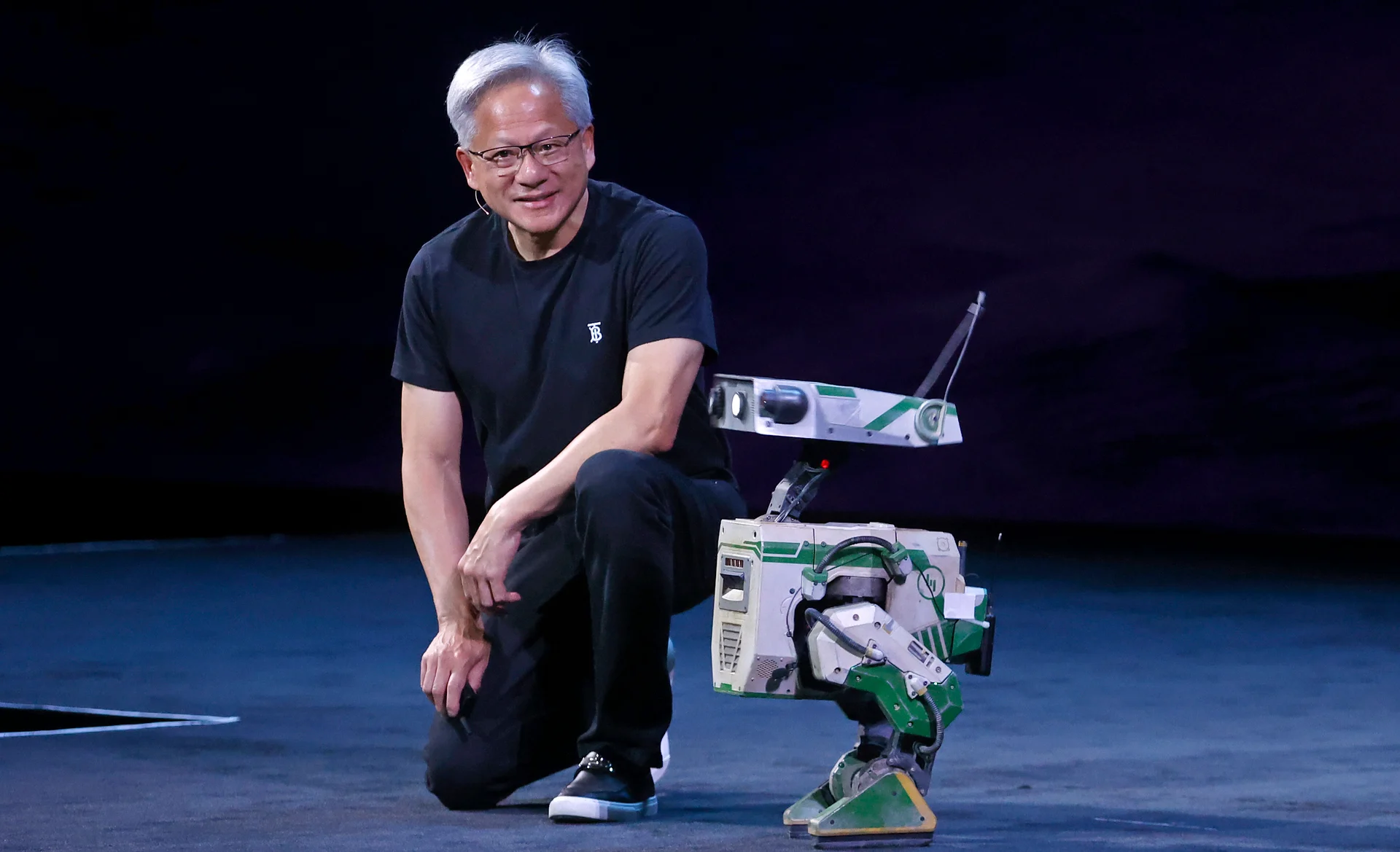

The most consequential advances in AI are emerging first in constrained, high-stakes environments such as factories, warehouses, and defense systems, rather than in consumer products. In industrial robotics, improvements in perception, motion planning, and real-time control are enabling machines to operate in semi-structured settings that previously resisted automation. These systems remain task-specific, but they are proving reliability at scale, generating the data, safety practices, and economic justification needed to expand into broader domains.

Military robotics reflects a similar pattern. Autonomous drones, surveillance platforms, and ground vehicles are advancing rapidly because the domain combines narrow objectives, constrained environments, and high investment levels. While these systems are not “general intelligence,” they are accelerating progress in navigation, multi-sensor fusion, and autonomous decision-making under uncertainty, capabilities that will transfer directly into civilian robotics.

4. From Narrow Systems to General-Purpose Consumer Robots

Consumer robotics is not advancing independently; it is downstream of these industrial and military breakthroughs. What is changing now is the gradual convergence of perception, reasoning, and action into unified systems that can generalize across tasks rather than execute a single programmed behavior. This shift is already visible in early humanoid and mobile robots, such as 1X’s NEO Robot, which still rely on narrow competencies but increasingly share common control stacks that can be adapted across environments.

This shift marks an important transition: from robotics as collections of specialized tools to robotics as adaptive platforms. While general-purpose consumer robots remain limited and expensive, the trajectory is clear. As industrial and defense systems mature, the boundary between “specific” and “general” robotics will continue to erode, bringing AI out of static prediction and into continuous, embodied action.

Open-Weight Models Catching Up with Closed Models

5. Innovation Shifts from Centralised Vendors to Community Ecosystems

When the frontier is no longer controlled by a handful of companies, innovation patterns change. We already see this in the rapid progress coming from distributed efforts such as DeepSeek, the Llama open-source ecosystem, and research collectives like EleutherAI and LAION, all of which have produced breakthroughs that spread globally within days. Advances in training efficiency, from QLoRA to FlashAttention, also emerged from the open community rather than proprietary labs. As these dynamics continue into 2026, experimentation becomes faster, ideas circulate more freely, and the landscape evolves toward multiple viable approaches to intelligence rather than a single dominant architecture.

6. Open-Weight Models Achieving Performance Parity

The competitive landscape between open-weight and proprietary AI models is shifting in a meaningful way. Through 2025, open-weight models such as Llama 4, Mistral Large, Qwen 2.5 and DeepSeek-V2 made rapid progress, narrowing the gap in reasoning, multimodality and efficiency and, in some domains, outperforming closed systems outright. These advances do not diminish the value of proprietary research, but they do change the balance of power. As high-performing models become widely accessible, technical capability becomes less of a differentiator, and the playing field moves closer to level.


The Next Phase of AI-Generated Reality

7. Instant 3D Worlds, Procedural Development, and Generative World Models

The convergence of generative models, world modeling, and real-time game engines is beginning to reshape the economics of interactive media. Beyond generating individual assets, AI systems are increasingly capable of learning and simulating coherent environments, the so-called generative world models that capture spatial structure, physics, and temporal consistency. Recent demonstrations from Google DeepMind, NVIDIA’s AI toolchain, and platforms like Luma AI show pipelines that can produce rigged 3D assets, explorable spaces, and prototype-level worlds directly from text or visual prompts.

These workflows are moving beyond experimental demos toward early production use, particularly in games, simulation, and virtual environments. By 2026, generative world models are likely to make procedural development far more accessible—enabling small teams, and even individuals, to design interactive worlds that previously required large studios, extensive manual modeling, and long iteration cycles.

8. AI Verification Crisis and the Push for Provenance

As synthetic content becomes visually indistinguishable from reality, the need for trust infrastructure becomes unavoidable. We already see this taking shape: Google’s SynthID embeds watermarks directly in generated content, Adobe’s Content Credentials and the broader C2PA standard attach cryptographically signed provenance metadata that travels with the file, and platforms such as YouTube and TikTok have introduced mandatory disclosure for AI-generated media. Regulators, particularly in the EU through the AI Act, are moving toward stricter requirements for labelling and traceability. These measures won’t eliminate synthetic media, but they will define clearer boundaries around what can be trusted and what platforms are accountable for.
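To make the idea of signed provenance metadata concrete, here is a minimal Python sketch of the general pattern: hash the content, record a claim about it, sign the claim, and verify both later. It is an illustration only and not the C2PA format or any vendor's implementation. The names `make_manifest`, `verify` and `SECRET_KEY` are hypothetical, and the shared-key HMAC is a simplification we assume for brevity; real provenance systems rely on public-key signatures and certificates.

```python
import hashlib
import hmac
import json

# Hypothetical signing key, for illustration only.
SECRET_KEY = b"demo-key-not-for-production"


def make_manifest(content: bytes, generator: str) -> dict:
    """Build a signed claim that binds metadata to the exact content bytes."""
    claim = {
        "content_sha256": hashlib.sha256(content).hexdigest(),
        "generator": generator,
        "ai_generated": True,
    }
    payload = json.dumps(claim, sort_keys=True).encode()
    signature = hmac.new(SECRET_KEY, payload, hashlib.sha256).hexdigest()
    return {"claim": claim, "signature": signature}


def verify(content: bytes, manifest: dict) -> bool:
    """Check that the claim is untampered and still matches the content."""
    payload = json.dumps(manifest["claim"], sort_keys=True).encode()
    expected = hmac.new(SECRET_KEY, payload, hashlib.sha256).hexdigest()
    signature_ok = hmac.compare_digest(expected, manifest["signature"])
    content_hash = hashlib.sha256(content).hexdigest()
    hash_ok = manifest["claim"]["content_sha256"] == content_hash
    return signature_ok and hash_ok


image = b"synthetic image bytes"
manifest = make_manifest(image, generator="example-model")
original_ok = verify(image, manifest)          # unchanged file
tampered_ok = verify(image + b"!", manifest)   # file edited after signing
```

The check fails if either the file or the claim is altered after signing, which is the property that lets platforms and regulators treat provenance metadata as evidence rather than as a self-declared label.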

Scientific Breakthroughs Powered by AI

9. AI-Driven Scientific Discovery and Programmable Medicine

AI is increasingly acting as a general engine for scientific discovery, not just a clinical tool. Advances in molecular prediction, exemplified by systems like AlphaFold 3 and AI-driven platforms from Recursion and Insitro, are enabling researchers to model proteins, interactions, and biological mechanisms with unprecedented precision. This shift is compressing the gap between hypothesis, simulation, and experiment, allowing biology to be explored in a more programmable and iterative way.

In medicine, these capabilities are beginning to translate into patient-specific interventions, particularly in oncology, where companies like Tempus and Caris Life Sciences already use AI-guided molecular insights in care decisions. Early personalized vaccine efforts from Moderna, Merck, and BioNTech point to a broader trajectory: therapies designed algorithmically around individual biology rather than population averages. If current trends hold, 2026 may mark an inflection point where AI-driven scientific models begin to systematically shape how new treatments are discovered, tested, and deployed, not just in oncology but across complex disease domains.

The Physical Limits of AI Scale

10. AI Supply Chain Constraints Become Strategic Bottlenecks

As AI adoption accelerates, its limiting factors are shifting from algorithms to physical and human supply chains. Energy availability is emerging as a primary constraint, with large-scale training and inference placing sustained pressure on power grids and driving renewed interest in dedicated data-center energy infrastructure. In parallel, access to advanced silicon (GPUs, custom accelerators, and high-bandwidth memory) remains tightly coupled to a small number of manufacturers, making hardware supply both capital-intensive and geopolitically sensitive.

Beyond compute, AI systems depend on upstream resources that are increasingly scarce or concentrated. Rare earth minerals and specialty materials required for chip fabrication introduce additional fragility, while the global shortage of experienced AI researchers, systems engineers, and infrastructure talent continues to constrain execution more than model availability. By 2026, these supply-side factors are likely to play a decisive role in determining which organizations and regions can scale AI effectively, shifting competitive advantage from software alone toward integrated control of energy, hardware, and human capital.

Conclusion

Many of the developments outlined in this article point toward a more capable and deeply embedded form of AI. Agents will become more autonomous, interfaces more intuitive, models more accessible and synthetic media more pervasive. But alongside these advances, 2026 is likely to reveal which parts of the current momentum are durable and which are symptoms of a market moving ahead of its operational reality.

If the past few years have been defined by rapid expansion, the coming year may be defined by alignment - between expectations and outcomes, between ambition and the infrastructure required to support it. Some initiatives will mature into stable, high-value capabilities. Others will undergo natural correction as organisations shift from experimentation to measurable results. This adjustment is not a sign of decline; it is a sign of maturation. The field becomes sharper when speculation gives way to clarity.

In that sense, the "AI bubble" is not a rupture but a transition. It marks the moment when the noise begins to fade and the long-term work becomes visible. The companies that succeed through this shift will be those that approach AI not as a short-term opportunity, but as a system that demands rigor, integration and sustained investment. As the ecosystem recalibrates, the true advantages will belong to those who build intentionally, with a focus on reliability, real-world value and the organisational foundations needed to support what comes next.